How to Get Your Business Recommended by ChatGPT and Other AI Chatbots

Grab a cup of coffee, because we’re getting hands-on in this detailed guide on how to get your business recommended by ChatGPT and other AI chatbots.

If you’re a new reader or subscriber and haven’t read our piece on the impact of AI on search, I highly encourage you to skim it for context before reading this.

In any case, here’s a quick summary:

- Google AI Overviews and LLMs like ChatGPT are changing how users find information

- These AI systems can recommend businesses - the new age of SEO

We introduced 4 key pieces of an AI SEO strategy in the AI SEO Ultimate 2025 Guide.

Now it’s time to implement them properly!

So, I’ve divided this newsletter into 3 parts.

Chapter 1 is a detailed action plan with examples

Chapter 2 explains the “context window” limitation that every AI chatbot has, and how to address it

Chapter 3 introduces the concept of llms.txt. For those of you who know robots.txt, this will be interesting.

Let’s dive in!

Chapter 1: Detailed Action Plan with Detailed Examples

Let’s break down each strategy with concrete examples and implementation steps, using a fictional AI-powered CRM SaaS business (because what’s a business nowadays without AI in it?).

Topic Universe Mapping - In Practice

Creating a comprehensive map of related topics helps AI systems understand your domain authority. Here’s how to do it effectively:

- Start with your core products/services: List the 3-5 primary solutions you offer.

- Expand to related concepts: For each core offering, identify 5-10 related topics.

- Connect the dots: Draw relationships between these topics showing how they relate.

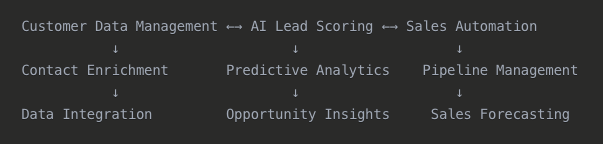

In our case, our AI-powered CRM SaaS company might have this simplified map:

This interconnected approach creates a semantic web that mirrors how AI systems understand relationships.
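If you’d like to keep the map somewhere more durable than a whiteboard, a tiny script works. Below is a minimal Python sketch, not a tool from this guide. The topics and relationship labels are hypothetical stand-ins for the map above. Each relationship is stored as a (source, relationship, target) triple, which makes it easy to spot core topics you haven’t connected to anything yet.

```python
from collections import defaultdict

# Hypothetical topic map for the fictional AI CRM company.
# Each entry is (source topic, relationship, target topic).
EDGES = [
    ("AI lead scoring", "improves", "sales forecasting"),
    ("AI lead scoring", "relies on", "engagement tracking"),
    ("sales forecasting", "depends on", "pipeline management"),
    ("pipeline management", "surfaces", "opportunity insights"),
]


def build_map(edges):
    """Turn (source, relationship, target) triples into an adjacency list."""
    graph = defaultdict(list)
    for source, relation, target in edges:
        graph[source].append((relation, target))
    return dict(graph)


def unlinked_topics(graph, core_topics):
    """Return core topics that have no outgoing relationship yet."""
    return [topic for topic in core_topics if not graph.get(topic)]


topic_map = build_map(EDGES)
for source, links in topic_map.items():
    for relation, target in links:
        print(f"{source} --{relation}--> {target}")

missing = unlinked_topics(topic_map, ["AI lead scoring", "sales forecasting", "contact enrichment"])
print(missing)  # ['contact enrichment']
```

Labeling each edge has a side benefit. The relationship words (“improves”, “relies on”) are a good starting point for the descriptive anchor text covered in the next section.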

Semantic Relationship Building - Concrete Techniques

Beyond just creating related content, you need to make the connections explicit:

Internal linking with semantic anchors:

Don’t just link to “read more” – use descriptive text that explains the relationship between topics.

Before: “Our AI lead scoring helps sales teams prioritize opportunities. Learn more.”

After: “Our AI lead scoring combines engagement metrics with historical win-rate patterns to create a more accurate forecast than traditional methods.”

Or: “Sales teams using AI-guided pipeline prioritization typically see a 27% reduction in sales cycle length compared to those relying on gut instinct alone.”

These semantic anchors explain WHY the linked content matters and HOW it relates to the current topic, giving both users and AI systems clear context for the relationship.

Here’s another before/after example of semantic linking:

Before: “For more information on AI lead scoring, click here.”

After: “Understanding AI lead scoring is essential for improving your sales forecast accuracy because properly scored leads enable sales teams to prioritize high-value opportunities and optimize resource allocation across your pipeline.”

This explicit connection helps AI understand not just that the topics are related, but how they’re related.
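To find the “click here” style links that need rewriting, you could scan your pages’ HTML. Below is a minimal sketch using Python’s built-in html.parser. The set of “generic” phrases is an assumption you should extend for your own site, and the sample HTML is hypothetical.

```python
from html.parser import HTMLParser

# Assumed list of generic anchor phrases; extend it for your own site.
GENERIC_ANCHORS = {"click here", "read more", "learn more", "here", "more"}


class AnchorCollector(HTMLParser):
    """Collects (href, anchor text) pairs from <a> tags."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None  # href of the <a> currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())  # collapse whitespace
            self.links.append((self._href, text))
            self._href = None


def generic_links(html):
    """Return links whose anchor text is generic rather than descriptive."""
    parser = AnchorCollector()
    parser.feed(html)
    return [(href, text) for href, text in parser.links
            if text.lower().strip(".!") in GENERIC_ANCHORS]


html = ('<p>For more on AI lead scoring, <a href="/lead-scoring">click here</a>.</p>'
        '<p>Read <a href="/forecasting">how AI lead scoring improves forecast accuracy</a>.</p>')
print(generic_links(html))  # [('/lead-scoring', 'click here')]
```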

Use schema markup: Implement Article, FAQPage, and HowTo schemas to clarify content relationships.

Create hub pages: Develop comprehensive resource pages that connect multiple related pieces of content.
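As an example of schema markup, here is a minimal Python sketch that builds FAQPage JSON-LD using schema.org’s FAQPage, Question and Answer types. The question and answer text are hypothetical placeholders for our fictional AI CRM. The printed JSON would go on the page inside a script tag of type application/ld+json.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage JSON-LD object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Hypothetical FAQ content for the fictional AI CRM.
faq = faq_jsonld([
    ("What is AI lead scoring?",
     "AI lead scoring uses machine learning to rank prospects by how likely they are to convert."),
])
print(json.dumps(faq, indent=2))
```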

User Journey Content Development

Content!? Yes, content!

I know I’ve talked about SEO rankings dropping and such, but we’re here to get Gemini and other LLMs to talk about our business, right? The only way they can do that is if there’s publicly available content on your website for them to be trained on the next time they’re trained.

Just like in traditional SEO, different users are at different stages of their journey, and your content needs to address all of them. These stages are:

- Awareness stage: Educational content explaining concepts

- Consideration stage: Comparative analysis and practical applications

- Decision stage: Specific solutions and implementation guides

For example, take “AI lead scoring” and create:

- Awareness: “What is AI lead scoring and why does it transform sales efficiency?”

- Consideration: “5 ways AI lead scoring outperforms traditional methods for B2B sales”

- Decision: “How to implement predictive lead scoring with [Our AI CRM]”

When AI systems see this comprehensive coverage, they’re more likely to reference your business as an authoritative source for the topic.
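To find holes in your coverage, you can tag each page with a topic and a stage and check for gaps. The minimal Python sketch below assumes a hypothetical content inventory. The first two titles come from the example above; the third is made up.

```python
STAGES = ("awareness", "consideration", "decision")

# Hypothetical content inventory: (topic, stage, page title).
INVENTORY = [
    ("AI lead scoring", "awareness", "What is AI lead scoring and why does it transform sales efficiency?"),
    ("AI lead scoring", "consideration", "5 ways AI lead scoring outperforms traditional methods for B2B sales"),
    ("sales forecasting", "awareness", "What is AI sales forecasting?"),
]


def journey_gaps(inventory, topics):
    """Map each topic to the funnel stages that have no content yet."""
    covered = {(topic, stage) for topic, stage, _title in inventory}
    return {topic: [s for s in STAGES if (topic, s) not in covered] for topic in topics}


print(journey_gaps(INVENTORY, ["AI lead scoring", "sales forecasting"]))
# {'AI lead scoring': ['decision'], 'sales forecasting': ['consideration', 'decision']}
```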

Information Hierarchy Implementation

AI systems pay attention to how you structure information:

- Use clear H-tags: H1 for main topic, H2 for subtopics, H3 for specific points.

- Frontend prominence = backend importance: Information at the top of your pages and in headers signals priority to AI.

- Implement lists and tables: Structured information is easier for AI to parse and reference.

For example, if you’re creating content about AI lead scoring:

```html
<h1>AI-Powered Lead Scoring Strategies</h1>
<p>Introduction paragraph explaining importance...</p>

<h2>1. Behavioral Intent Scoring</h2>
<p>Explanation of this approach...</p>

<h3>Implementation Steps:</h3>
<ul>
  <li>Step 1: Activity data collection</li>
  <li>Step 2: Behavioral pattern identification</li>
  <!-- etc. -->
</ul>
```

It’s a no-brainer, really. This clean structure helps AI systems extract and synthesize your content correctly.
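To check this structure across many pages, here’s a minimal Python sketch using the standard library’s html.parser. It assumes the conventions described above: one H1 per page, and no jumping from H1 straight to H3. Those are guidelines rather than hard rules, so treat its output as hints.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records the level (1-6) of every h1-h6 tag in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        # html.parser lowercases tag names; "hr" is excluded by the digit check.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_problems(html):
    """Flag a missing or repeated H1 and any skipped heading levels."""
    parser = HeadingCollector()
    parser.feed(html)
    problems = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one <h1>, found {h1_count}")
    for prev, cur in zip(parser.levels, parser.levels[1:]):
        if cur > prev + 1:
            problems.append(f"<h{prev}> is followed by <h{cur}> (skipped a level)")
    return problems


good = ("<h1>AI-Powered Lead Scoring Strategies</h1>"
        "<h2>1. Behavioral Intent Scoring</h2><h3>Implementation Steps:</h3>")
print(heading_problems(good))                   # []
print(heading_problems("<h1>A</h1><h3>B</h3>"))  # ['<h1> is followed by <h3> (skipped a level)']
```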

Context Enhancement Strategies

Ambiguity is the enemy of AI comprehension. Ensure your content is extremely clear to read and comprehend.

This sounds simple, but in jargon-heavy industries like marketing, not everyone understands acronyms like CLV, MOFU and TOFU.

And for our CRM example, that’s true for SQL, MQL and so on. Here are some key things to keep in mind:

- Define terms clearly: Don’t assume shared understanding of jargon.

- Use consistent terminology: Pick one term for each concept and stick with it

- Provide examples: Concrete examples help clarify abstract concepts

Compare these two approaches:

Ambiguous: “Our solution leverages cutting-edge AI to optimize your sales process.”

Clear: “Our AI-based CRM software uses machine learning algorithms to analyze prospect interaction patterns and automatically prioritize leads, with 91% accuracy in predicting which opportunities will close within 30 days.”

The second version gives AI systems concrete information they can confidently reference.
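To catch undefined jargon before an AI (or a reader) trips on it, one rough check is to flag acronyms that never appear in the “Full Name (ACRONYM)” form. This Python sketch is only a heuristic. It looks for 2-5 capital letters in parentheses, so it won’t verify that the preceding words actually spell the acronym out. It will also flag all-caps words that aren’t acronyms.

```python
import re


def undefined_acronyms(text):
    """Acronyms (2-5 capitals) that never appear in parentheses, e.g. 'Marketing Qualified Lead (MQL)'."""
    used = set(re.findall(r"\b[A-Z]{2,5}\b", text))
    defined = set(re.findall(r"\(([A-Z]{2,5})\)", text))
    return sorted(used - defined)


sample_copy = "A Marketing Qualified Lead (MQL) becomes an SQL once sales accepts it. Track CLV too."
print(undefined_acronyms(sample_copy))  # ['CLV', 'SQL']
```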

Chapter 2: Understanding Context Windows

A context window is the amount of text a chatbot can “see” and process at once. Think of it as the AI’s working memory.

At the time of writing, context windows vary widely:

| Chatbot/Model | Context window size (tokens) | Search and integration notes |
|---|---|---|
| Google Gemini 1.5/2.5 | 1,000,000 | Deep web search, file, and media support |
| Perplexity AI | 1,000,000 | Web search, file uploads, free for signed-in users |
| OpenAI GPT-4.1 | 1,000,000 | Web browsing available in ChatGPT Plus |
| Meta Llama 4 Maverick | 1,000,000 | Multimodal, enterprise search |
| Anthropic Claude 4 (Opus/Sonnet) | 200,000 | Web search, document analysis |
| OpenAI GPT-4o | 128,000 | Web browsing, file analysis |
| Mistral Large 2, DeepSeek R1/V3 | 128,000 | Vision-language, advanced search |
| Meta Llama 4 Scout | 10,000,000 | Specialized, on-device, deep search |
| Magic.dev LTM-2-Mini | 100,000,000 | Extreme long-context, code/data analysis |
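Context windows are measured in tokens, not words. A commonly quoted rule of thumb for English text is that one token is roughly three quarters of a word. The Python sketch below therefore estimates tokens as 4/3 of the word count. It’s an approximation only. Exact counts depend on each model’s tokenizer; for OpenAI models, the tiktoken library gives real counts.

```python
# Rule of thumb, not a real tokenizer: in English text one token is
# roughly 3/4 of a word, i.e. about 4 tokens for every 3 words.
def estimate_tokens(word_count):
    """Roughly estimate the tokens used by an English text of word_count words."""
    return word_count * 4 // 3


def share_of_window(word_count, window_tokens):
    """Rough fraction of a context window that the text would occupy."""
    return estimate_tokens(word_count) / window_tokens


white_paper_words = 10_000  # the 10,000-word white paper example from this chapter
print(estimate_tokens(white_paper_words))  # 13333
print(f"{share_of_window(white_paper_words, 128_000):.1%} of a 128,000-token window")  # 10.4% ...
```

A single page rarely fills a modern window on its own. With web search, though, the window is shared between the conversation and every page the chatbot pulls in. Each individual page plausibly gets far less room than these raw numbers suggest.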

Why does this matter? For chats without web search, it matters less, because the chatbot answers from what it learned during training.

However, when chatbots search the web for answers, they go through many pages that answer a query, then synthesize and summarize them for the user asking the question. Google’s AI Overviews already do this, and ChatGPT, Perplexity and Claude have all added web search.

Say someone has been discussing CRMs with a chatbot for a while. That conversation already occupies part of the context window, and there is only so much a chatbot can hold and process at once. This is getting better with every iteration. Still, the key thing to know is that an AI can only reference what it finds useful on its pass through your page.

If your headings don’t contain what the chatbot is looking for, it is likely to move on to the next website. Reading the full content of every site would make the chatbot slow.

In other words, if your key information is buried deep in verbose content and never referenced in a heading, AI may never pick it up. Here are steps to make sure your content gets picked up:

- Front-load important information: Put your most valuable content at the beginning of pages.

- Create concise, dedicated pages: Topic-focused pages are more likely to be fully ingested.

- Use clear metadata: Title tags, meta descriptions, and headings signal importance
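To check whether a page actually front-loads its key points, you can test whether your key phrases appear in its opening words. Below is a minimal Python sketch. The 100-word budget and the sample text are arbitrary assumptions. Run it on the page’s visible text rather than on raw HTML.

```python
def buried_phrases(page_text, key_phrases, budget_words=100):
    """Return the key phrases that do NOT appear within the first budget_words words."""
    opening = " ".join(page_text.split()[:budget_words]).lower()
    return [phrase for phrase in key_phrases if phrase.lower() not in opening]


# Hypothetical page text: one key point up front, another buried after filler.
page_text = (
    "AI lead scoring predicts which deals will close. "
    + "Background detail. " * 100
    + "Forecast accuracy is 87%."
)
print(buried_phrases(page_text, ["AI lead scoring", "forecast accuracy"]))  # ['forecast accuracy']
```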

For example, if you have a 10,000-word white paper on AI-driven sales forecasting:

Before: A single massive page with information scattered throughout

After: A hub page with clearly labeled sections and downloadable components, each focused on a specific sub-topic.

In our CRM example, that would be: data collection, model training, forecast accuracy, and so on, with key points clearly highlighted at the top.

In summary, keep it short and simple. Put the most important information at the top, and break long content into shorter pieces that each focus on one topic.
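If the long-form source lives in Markdown, splitting it into one page per H2 section can be scripted. This Python sketch is one way to do it, under some assumptions:

- Sections start with “## ”.
- Anything before the first H2 is treated as hub-page intro and skipped.
- “## ” lines inside code blocks aren’t handled.

The section names reuse the CRM sub-topics above. The /forecasting/ path and slug format are hypothetical.

```python
import re


def split_by_h2(markdown):
    """Split Markdown into (title, body) pairs, one per '## ' section."""
    sections, title, lines = [], None, []
    for line in markdown.splitlines():
        if line.startswith("## "):
            if title is not None:
                sections.append((title, "\n".join(lines).strip()))
            title, lines = line[3:].strip(), []
        elif title is not None:  # text before the first H2 is skipped
            lines.append(line)
    if title is not None:
        sections.append((title, "\n".join(lines).strip()))
    return sections


def slugify(title):
    """Hypothetical URL slug format, e.g. 'Forecast Accuracy' -> 'forecast-accuracy'."""
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")


white_paper = """# AI-Driven Sales Forecasting
Intro for the hub page.
## Data Collection
Which CRM fields feed the model.
## Model Training
How the model learns from past deals.
## Forecast Accuracy
How forecasts are checked against actual results.
"""

for title, body in split_by_h2(white_paper):
    print(f"/forecasting/{slugify(title)}.html -> {body}")
```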

Chapter 3: Beyond robots.txt - The llms.txt File

Introducing llms.txt

I found this via a YouTube video that I’ve since lost track of. It covered a new standard proposed at llmstxt.org that aims directly at the context window issue we discussed in Chapter 2.

Most of the SEO consultants I follow say they don’t know whether this actually makes a difference. But they’re deploying it anyway.

While robots.txt tells search engines what not to crawl, the new llms.txt standard is designed specifically to help LLMs understand and navigate your website efficiently.

The llms.txt file provides a concise, structured way to present your most important content in LLM-friendly Markdown format.

We SEOs used to structure content for crawlers; now we’re doing it for LLMs. Call it AI Optimization.

How to implement llms.txt on your website

Here’s how to create an effective llms.txt file with an example using our AI CRM company:

First, we create a markdown file called “llms.txt” in our website’s root directory, so it is served at /llms.txt.

Follow this specific structure:

- H1 header with your company name.

- Blockquote with a brief company description.

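- Optional paragraphs with more detail about the company (no headings).

- H2 headers for content categories, each followed by a markdown list of links, with each link optionally followed by a colon and a short description. A section titled “Optional” marks secondary links that an LLM can skip when it needs a shorter context.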

Here’s how it looks:

```markdown
# AI CRM

> AI CRM is an AI-powered customer relationship management platform that uses machine learning to optimize sales processes through predictive lead scoring, opportunity insights, and sales forecasting.

Our platform helps sales teams prioritize leads with 91% accuracy in predicting which opportunities will close within 30 days.

## Core Features

- [AI Lead Scoring](https://aicrm.com/lead-scoring.html.md): Our proprietary algorithm evaluates prospect engagement patterns and assigns accurate conversion probability scores.
- [Sales Forecasting](https://aicrm.com/forecasting.html.md): Machine learning models analyze historical data to predict revenue with 87% accuracy.
- [Pipeline Management](https://aicrm.com/pipeline.html.md): Visual workflow tools with AI-powered insights for opportunity advancement.

## Use Cases

- [Enterprise Sales Teams](https://aicrm.com/enterprise.html.md): How Fortune 500 companies leverage our platform for complex B2B sales cycles.
- [SMB Implementation](https://aicrm.com/smb.html.md): Streamlined deployment for small teams without dedicated sales operations staff.

## Optional

- [Technical Documentation](https://aicrm.com/api.html.md): API reference and integration guides.
- [Case Studies](https://aicrm.com/cases.html.md): Detailed ROI analysis from customer implementations.
```

The key idea here is that for each linked page (like /lead-scoring.html), you also create a clean markdown version at the same URL with .md appended (/lead-scoring.html.md). This gives LLMs access to your content without the navigation, JavaScript, and other web elements that make parsing difficult.
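Before publishing, it can help to sanity-check that your file follows this structure. The Python sketch below is a minimal, unofficial parser for the structure described above: an H1 title, a blockquote summary, and H2 sections containing markdown link lists. It is not the reference tooling from llmstxt.org. The sample is an abbreviated version of the AI CRM file.

```python
import re

# One link per list item: "- [name](url)" optionally followed by ": notes".
LINK_ITEM = re.compile(r"^- \[(?P<name>[^\]]+)\]\((?P<url>[^)\s]+)\)(?::\s*(?P<notes>.*))?$")


def parse_llms_txt(text):
    """Parse llms.txt-style Markdown into its title, summary and link sections.

    Raises ValueError if the file does not start with an H1 title.
    """
    lines = [line.rstrip() for line in text.splitlines() if line.strip()]
    if not lines or not lines[0].startswith("# "):
        raise ValueError("llms.txt should start with an H1 title, e.g. '# AI CRM'")
    result = {"title": lines[0][2:].strip(), "summary": None, "sections": {}, "malformed": []}
    current = None  # name of the H2 section we are inside, if any
    for line in lines[1:]:
        if line.startswith("> ") and current is None and result["summary"] is None:
            result["summary"] = line[2:].strip()
        elif line.startswith("## "):
            current = line[3:].strip()
            result["sections"][current] = []
        elif current is not None:
            item = line.strip()
            match = LINK_ITEM.match(item)
            if match:
                result["sections"][current].append(match.groupdict())
            elif item.startswith("- "):
                result["malformed"].append(item)  # list item that isn't a proper link
        # Other lines before the first H2 are free-form detail and are ignored.
    return result


sample = """# AI CRM

> AI CRM is an AI-powered customer relationship management platform.

Our platform helps sales teams prioritize leads.

## Core Features

- [AI Lead Scoring](https://aicrm.com/lead-scoring.html.md): Evaluates prospect engagement patterns.
- [Pipeline Management](https://aicrm.com/pipeline.html.md)

## Optional

- [Case Studies](https://aicrm.com/cases.html.md): Detailed ROI analysis.
"""

parsed = parse_llms_txt(sample)
print(parsed["title"], list(parsed["sections"]))  # AI CRM ['Core Features', 'Optional']
```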

Usually, websites don’t automatically serve markdown content. This is how to implement it:

- Using a Plugin: If you’re on WordPress, for example, look for a plugin that can automatically generate markdown versions of your posts and pages. Some SEO and documentation plugins have this functionality built-in or as an extension.

- Custom Function: Add a custom function to your WordPress theme’s functions.php file or as a simple plugin that detects when a URL with “.md” is requested and serves a markdown version of the corresponding page.

- External Service: Use Cloudflare Workers or a similar edge computing platform to intercept requests ending in “.md” and transform your HTML content to markdown on the fly.

- Static Generation: If you rebuild your site regularly, you could add a script to your build process that creates markdown versions of all your content and places them in the appropriate locations.

- WordPress REST API: Alternatively, you could create a custom endpoint in the WordPress REST API that serves markdown versions of your content when requested.
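Here’s a minimal Python sketch of the static generation route. It converts a small subset of HTML (headings, paragraphs, list items and links) to markdown, then writes the result next to the original file, turning lead-scoring.html into lead-scoring.html.md. It uses only the standard library and skips nav, header, footer, script and style elements. It drops anything it doesn’t recognize, so a dedicated HTML-to-markdown converter is likely a better fit for complex pages.

```python
from html.parser import HTMLParser
from pathlib import Path

SKIP = {"nav", "header", "footer", "script", "style", "noscript"}  # page chrome to drop
BLOCKS = {"h1", "h2", "h3", "p", "li"}                              # elements that become Markdown blocks
PREFIX = {"h1": "# ", "h2": "## ", "h3": "### ", "li": "- "}


class MarkdownExtractor(HTMLParser):
    """Converts headings, paragraphs, list items and links to Markdown; drops the rest."""

    def __init__(self):
        super().__init__()
        self.blocks = []      # finished Markdown blocks
        self._buf = []        # text of the block currently being built
        self._skip_depth = 0  # > 0 while inside nav/script/etc.
        self._href = None     # href of the link currently open, if any

    def handle_starttag(self, tag, attrs):
        if tag in SKIP:
            self._skip_depth += 1
        elif self._skip_depth:
            return
        elif tag in BLOCKS:
            self._buf = [PREFIX.get(tag, "")]
        elif tag == "a":
            self._href = dict(attrs).get("href")
            if self._href:
                self._buf.append("[")

    def handle_endtag(self, tag):
        if tag in SKIP:
            self._skip_depth = max(0, self._skip_depth - 1)
        elif self._skip_depth:
            return
        elif tag == "a":
            if self._href:
                self._buf.append(f"]({self._href})")
            self._href = None
        elif tag in BLOCKS:
            text = " ".join("".join(self._buf).split())  # collapse whitespace
            if text.strip("#- "):
                self.blocks.append(text)
            self._buf = []

    def handle_data(self, data):
        if not self._skip_depth:
            self._buf.append(data)


def html_to_markdown(html):
    parser = MarkdownExtractor()
    parser.feed(html)
    parser.close()
    return "\n\n".join(parser.blocks) + "\n"


def write_markdown_copy(html_path):
    """Write e.g. public/lead-scoring.html to public/lead-scoring.html.md."""
    path = Path(html_path)
    md_path = path.with_name(path.name + ".md")
    md_path.write_text(html_to_markdown(path.read_text(encoding="utf-8")), encoding="utf-8")
    return md_path


page = ('<nav><a href="/">Home</a></nav><h1>AI Lead Scoring</h1>'
        '<p>Scores leads using <a href="/forecasting.html">engagement data</a>.</p>'
        '<script>track()</script><ul><li>Fast</li></ul>')
print(html_to_markdown(page))
```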

That said, please take this suggestion with a grain of salt for now. I have seen my clients’ websites show up in Google AI Overviews and ChatGPT without an llms.txt file.

Most chatbots already have capable scraping mechanisms built in, but many AI crawlers still don’t render JavaScript. So if your website relies heavily on JavaScript rendering, it makes sense to add an llms.txt file so your content can be parsed easily when these bots come crawling.
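A quick way to see what a non-rendering crawler gets is to fetch the raw HTML, with no JavaScript executed, and check whether your key phrases are in it. This Python sketch approximates that. It doesn’t reproduce how any particular AI crawler behaves, and the User-Agent string and example URL are placeholders.

```python
import re
import urllib.request
from html import unescape


def raw_html(url):
    """Fetch the HTML as served, with no JavaScript executed (like a non-rendering crawler)."""
    request = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0 (visibility-check)"})
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def visible_without_js(page, phrase):
    """True if phrase appears in the page's text after dropping scripts, styles and tags."""
    page = re.sub(r"(?is)<(script|style)\b.*?</\1\s*>", " ", page)  # drop script/style bodies
    text = re.sub(r"<[^>]+>", " ", page)                              # drop remaining tags
    text = " ".join(unescape(text).split())                           # decode &amp; etc.
    return phrase.lower() in text.lower()


# Usage (placeholder URL):
# visible_without_js(raw_html("https://example.com/lead-scoring.html"), "AI lead scoring")
```

If the phrase only shows up after JavaScript runs (as in a typical single-page app shell), this returns False.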

There’s also a directory of businesses using llms.txt, which may be useful for checking whether a competitor or another business in your industry already has one.

Putting It All Together: Your 30-day AI Visibility Action Plan

To implement these strategies effectively, follow this 30-day plan:

Week 1: Analysis & Planning:

- Map your topic universe

- Audit existing content for AI-friendliness

- Identify high-priority content to optimize first

Week 2: Structure & Hierarchy:

- Reorganize key pages for better information hierarchy

- Implement schema markup on priority content

- Create your schema.org dataset file

Week 3: Content Enhancement:

- Clarify ambiguous content

- Strengthen semantic relationships between topics

- Front-load key information for context window optimization

Week 4: Monitoring & Refinement:

- Test chatbot responses to queries in your domain

- Identify gaps in AI’s understanding of your business

- Refine your approach based on what’s working
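For the Week 4 testing, it helps to save chatbot answers to a fixed set of queries and track how often your brand shows up. The Python sketch below assumes you’ve collected the answers yourself, either manually or through whichever API you use. The queries and answer text are made up. Plain substring matching is crude: a generic brand name like “AI CRM” will also match answers that use the phrase generically.

```python
from dataclasses import dataclass


@dataclass
class ChatbotAnswer:
    """One saved chatbot answer to a test query."""
    chatbot: str
    query: str
    text: str


def mention_rate(answers, brand):
    """Share of answers per chatbot that mention the brand (case-insensitive substring match)."""
    totals, hits = {}, {}
    for answer in answers:
        totals[answer.chatbot] = totals.get(answer.chatbot, 0) + 1
        if brand.lower() in answer.text.lower():
            hits[answer.chatbot] = hits.get(answer.chatbot, 0) + 1
    return {bot: hits.get(bot, 0) / count for bot, count in totals.items()}


# Made-up answers for illustration only.
answers = [
    ChatbotAnswer("ChatGPT", "best AI CRM for small teams", "Options include AI CRM and several larger suites."),
    ChatbotAnswer("ChatGPT", "AI lead scoring tools", "Popular tools score leads from engagement data."),
    ChatbotAnswer("Perplexity", "best AI CRM for small teams", "AI CRM offers predictive lead scoring."),
]
print(mention_rate(answers, "AI CRM"))  # {'ChatGPT': 0.5, 'Perplexity': 1.0}
```

Re-running the same query set every few weeks gives you a rough trend line for the “Refine your approach” step.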

Remember, this isn’t a one-time project. As AI systems evolve, your strategy will need to adapt.

At Get Strategiq, we focus on AI SEO Optimization and content marketing. If you’re looking to check how ready your website is for the AI search era, we recommend starting with an AI Search Readiness Audit.

For businesses looking to kick-start their AI presence, we offer a 90-day AI Citation Sprint. It focuses on optimizing your website’s infrastructure and content, and on adding new content to build your authority and get you cited by AI within 90 days.

If you have any questions about AI SEO, feel free to send us a message and we’ll get back to you as soon as possible.